In the mid-1990s, researchers at the University of Texas set out to analyze what people were saying about a new technology called “the Internet”.

These researchers uncovered patterns in the language people used to describe this still-unfamiliar technology. They found:

- Metaphors associated with space and travel (think “cyberspace” or highways for data)

- Metaphors related to commerce or politics (think libraries, discussion forums or communities)

- Metaphors associated with animals or even intelligent beings (…Skynet anyone?)

Judging by all the column inches devoted to the next version of the Internet, the Internet of Things, this iteration is expected to change the physical world as much as the last one changed publishing, media, and commerce. However, as in the early days of the Internet itself, people use very different concepts to illustrate its essence and meaning:

- Metaphors about connectivity (when people are talking about 5G, MQTT, ZigBee, LoRa and so on)

- Metaphors about twins (think “digital twins,” or “what NASA built to understand what was going on in the space shuttle from Earth”)

- Metaphors about data (think “data lakes” or someone saying “data is the new oil”)

- Metaphors about intelligence (consider “machine learning”)

- Metaphors about ecosystems (think “smart home,” “smart cities,” “smart health”)

At Dassault Systèmes, we prefer to focus on human experience outputs rather than technology inputs: we find social interactions and their impact on value more interesting than “ingredients” such as connectivity, data equity, or AI. So this article focuses on how the virtual world extends and improves the real world by bringing products and people’s digital lives together.

The End of Trend

Controls that help us navigate web media have become second nature: hand a screen to a young child born after the arrival of touch-sensitive computers and watch what happens. However, we’ve had time to get there; many of the user experience principles designers apply to web interaction were born even before I was.

At the cutting edge, new ways to interact with media-based experiences are emerging. I recently met up with Dirk Songür at Microsoft’s Mixed Reality Studio in Berlin. He showed me what his team was doing for Microsoft’s HoloLens, which reminded me that “the future is already here — it’s just not very evenly distributed.” With HoloLens, the user agent that enables interaction is 3D-aware, so one thing about IoT interactions becomes immediately apparent: the most intuitive virtual metaphor for a physical thing is an intelligent visualization of the thing itself.

The emergence of conversational interfaces is also interesting. Another thought leader I recently spoke to, Marco Spies of Think Moto, is launching an academy in Berlin to build competence in new forms of user agents that bridge products and media, particularly voice but also XR. He makes the very valid point that natural language is the most ubiquitous “human standard” for engaging with media interfaces.

So there are situations where it makes sense to break out of the 2D interaction space that characterizes the web in its current form. However, betting against the Internet as the medium for mass engagement assumes that open standards will fail to evolve to cope with these new kinds of interface. Many standards are already in the works, and some already work; a small sample includes WebGL, WebXR, and the Web of Things initiative.

Ultimately, it won’t be user agents or runtime environments that constrain the growth of engaging media interaction with things. Instead, it will be access to content itself, or more precisely, the designer’s ability to rapidly build the content they need to suit the context of their app. Giving designers this freedom will streamline app development and improve usability, regardless of the runtime and the means of interaction. We believe the right approach is to prioritize standards-based delivery formats while supporting specialized application runtimes in their native formats where that makes sense (e.g., when an application targets specialized hardware).

The Thingterface

The fusion of media with things will not rob products of their essential functions. Products will, however, become open systems: user requirements are unbundled from a product’s original specification, and offerings are tailored through media extensions. Ideally, this lets a product meet user expectations within a specific set of circumstances.

None of this will happen if media designers have to submit their ideas to a product change control board: they must move fast without breaking things. The crux of this engineering challenge is synchronizing collaboration between the developers of things and the developers of media, so that things and media remain cohesive.

To deal with these issues, we’re building tools and workflows that allow designers to develop and publish context-specific “thingterfaces” for use within their applications. Tools that are aware of the design of the thing users will interact with improve the thingterface’s form, function, and behavior. The result will be millions of useful controls, crafted directly on top of the relevant product definition, which covers not only the physical design but also the systems design.

The Remotable

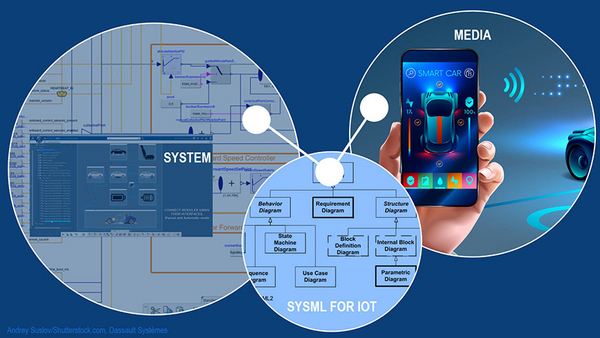

In many situations, a development team designing a new system will already model functional specifications using tools that expose capabilities in standard formats such as SysML or the Functional Mock-up Interface (FMI).

SysML in particular, as a UML derivative, is a language that media developers can potentially understand. What is still required is a set of definitions on top of SysML that fit the communication architectures typical of IoT platforms, allowing thing developers to specify the remote interfaces to their things in a way that can be translated to fit the nuances of a given IoT platform or domain. There is some interesting work under way to map SysML to the IoT Architecture Reference Model, which we think will help fill this gap.
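
To make the idea of translating thing-side interface definitions more concrete, here is a minimal Python sketch under stated assumptions. A hypothetical, heavily simplified interface block (flow properties and operations, standing in for a real SysML model export) is mapped onto the property and action structure of a W3C Web of Things Thing Description. The class names, the type mapping, the rule that outgoing flows become read-only properties, and the example.com base URL are all illustrative assumptions, not an established SysML-to-WoT mapping.

```python
import json
from dataclasses import dataclass, field

# Hypothetical, simplified stand-in for a SysML interface block.
# A real model would come from a modeling tool export; parsing that is out of scope here.
@dataclass
class FlowProperty:
    name: str
    value_type: str  # SysML-style primitive, e.g. "Real", "Integer", "Boolean", "String"
    direction: str   # "out": the thing reports the value, "in": the value can be set

@dataclass
class Operation:
    name: str

@dataclass
class InterfaceBlock:
    name: str
    flow_properties: list[FlowProperty] = field(default_factory=list)
    operations: list[Operation] = field(default_factory=list)

# Assumed mapping from SysML primitive types to the JSON Schema types used in a TD.
TYPE_MAP = {"Real": "number", "Integer": "integer", "Boolean": "boolean", "String": "string"}

def to_thing_description(block: InterfaceBlock, base_url: str) -> dict:
    """Translate the interface block into a WoT Thing Description-style dict."""
    td = {
        "@context": "https://www.w3.org/2019/wot/td/v1",
        "title": block.name,
        "securityDefinitions": {"nosec_sc": {"scheme": "nosec"}},
        "security": "nosec_sc",
        "properties": {},
        "actions": {},
    }
    for fp in block.flow_properties:
        td["properties"][fp.name] = {
            "type": TYPE_MAP.get(fp.value_type, "string"),
            # Outgoing flows are only observed by media apps; incoming flows may be written.
            "readOnly": fp.direction == "out",
            "forms": [{"href": f"{base_url}/properties/{fp.name}"}],
        }
    for op in block.operations:
        td["actions"][op.name] = {"forms": [{"href": f"{base_url}/actions/{op.name}"}]}
    return td

# Usage with a hypothetical thermostat interface.
thermostat = InterfaceBlock(
    "Thermostat",
    [FlowProperty("temperature", "Real", "out"), FlowProperty("setpoint", "Real", "in")],
    [Operation("resetSchedule")],
)
td = to_thing_description(thermostat, "https://example.com/things/thermostat-1")
print(json.dumps(td, indent=2))
```

The point of the sketch is the separation of concerns: thing developers own the interface block, while the translation function is where platform- or domain-specific nuances would live, so the same definition could be emitted in other target formats.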

FMI is an open standard for exchanging and co-simulating models of complex systems, so that they can be tested during development. Simulating and validating a product’s potential performance at an early stage requires that the hardware side is modeled first. Extending the audience for those models to media developers opens up the possibility of offering a working software development kit well before the hardware implementation is complete.
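
A minimal sketch of what an SDK that exists before the hardware might look like, assuming Python. Media-side code is written against a hypothetical `ThermostatDevice` interface, and a pure-Python first-order thermal model, advanced in fixed steps in the spirit of FMI co-simulation, stands in for the device. This is not the FMI API: in practice the model would be an exported FMU driven by an FMI-capable runtime, and every class and method name here is hypothetical.

```python
from typing import Protocol

class ThermostatDevice(Protocol):
    """Hypothetical interface media apps code against, whatever sits behind it."""
    def read_temperature(self) -> float: ...
    def write_setpoint(self, value: float) -> None: ...

class SimulatedThermalModel:
    """Pure-Python stand-in for a co-simulation model: a first-order room model.

    Loosely mirrors the set-inputs / do-step / get-outputs cycle of co-simulation;
    it is not an FMU and does not use the FMI API.
    """
    def __init__(self, initial_temp: float = 18.0, time_constant_s: float = 600.0):
        self.temperature = initial_temp
        self.setpoint = initial_temp  # model input
        self.tau = time_constant_s
        self.time = 0.0

    def do_step(self, step_size: float) -> None:
        # Explicit Euler step of dT/dt = (setpoint - T) / tau
        self.temperature += step_size * (self.setpoint - self.temperature) / self.tau
        self.time += step_size

class SimulatedThermostat:
    """Early SDK backend: implements ThermostatDevice on top of the simulated model."""
    def __init__(self, model: SimulatedThermalModel, step_size: float = 1.0):
        self.model = model
        self.step_size = step_size

    def read_temperature(self) -> float:
        return self.model.temperature

    def write_setpoint(self, value: float) -> None:
        self.model.setpoint = value

    def advance(self, seconds: float) -> None:
        # Advance simulated time in fixed communication steps.
        for _ in range(int(seconds / self.step_size)):
            self.model.do_step(self.step_size)

def comfort_widget(device: ThermostatDevice) -> str:
    """Example media-side code that never needs to know whether the device is real."""
    return f"Room: {device.read_temperature():.1f} °C"

# Usage: develop and test the widget against the simulation.
device = SimulatedThermostat(SimulatedThermalModel())
device.write_setpoint(22.0)
device.advance(600)
print(comfort_widget(device))
```

Because `comfort_widget` depends only on the interface, the simulated backend could later be swapped for one that talks to the physical product without changing the media code.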

Media for Systems

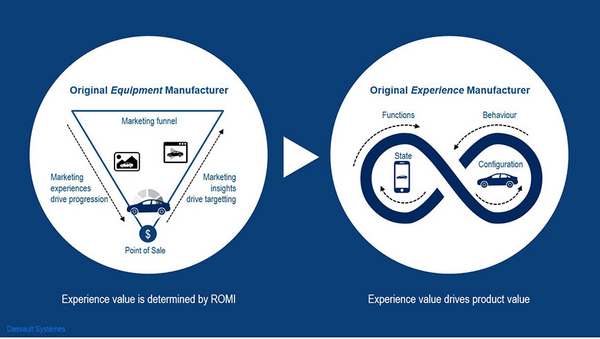

The next generation of the Internet connects things with media to create new kinds of offerings for customers. The value of media lies not only in its ability to drive unit sales: media experiences increase value to customers beyond the constraints of time and space that bind the purely physical elements of product design.

The good news is that standards and tools already exist in engineering toolchains that help synchronize the development of material things and their virtual counterparts. Often, in IoT discussions, this point is overlooked, as conversations about media design tend to focus solely on media-driven metaphors.

By better connecting systems engineering processes on the hardware side with experience development processes on the media side, manufacturers can leverage their skills and intellectual property to accelerate the emergence of value-enhancing applications. Supporting value-creating ecosystems of things and media will become a key battleground for hardware brands in an always-on world. In many ways, the effort required to support a media application ecosystem will become comparable to the effort brands put into distribution and retail sales channels today.

This article by Tom Acland is part of a series by the Dassault Systèmes 3DEXCITE Strategy Department.