Check out Jan’s presentation “AI for EMC Simulation”, part of the Electromagnetic Days on the SIMULIA Community!

Can you tell us about machine learning and how it can benefit electromagnetics?

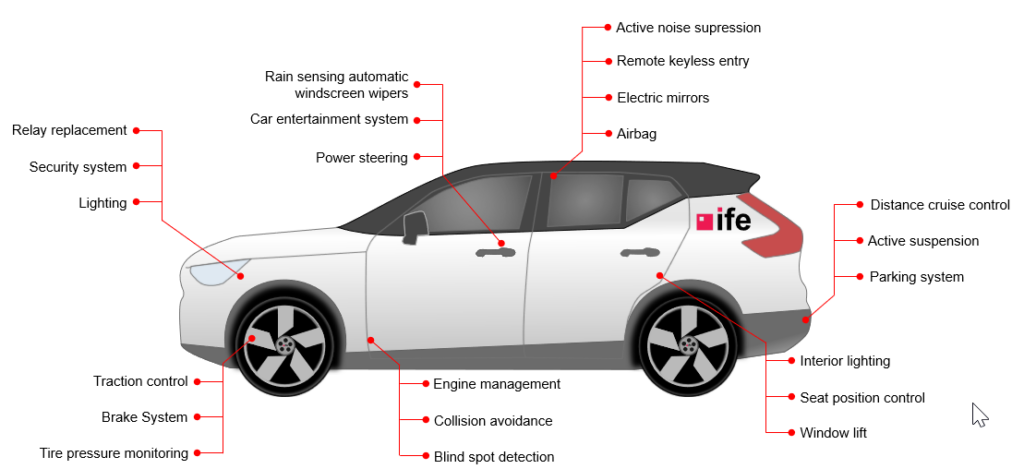

Electronic systems and their electromagnetic coupling paths are very complex. General rules exist and are written down in textbooks. However, there are so many individual electronic designs that it is impossible to predict their electromagnetic interactions from rules alone. Usually, any large company has experienced EMC engineers who, based on many years of practice, are best able to judge which EMC problems are likely to arise in a system and how to mitigate them. They can do this because they are experts and have seen so many products over their careers, in various situations and operating states.

Machine learning is about experience. We train a model on available data. Once the model is sufficiently accurate, we use it to investigate cases that lie within the range it was trained for. The main benefits of the model are that it handles a large number of varying parameters and that it computes very fast. In the end, the model mimics what the experienced EMC engineer does: based on some observations, we draw conclusions about similar cases. Of course, the brain of the experienced EMC engineer is much smarter and can infer much more than any ML model ever will. But the ML model learns very fast; it can learn 24 hours a day. And we face so many technological novelties these days. I am convinced that ML models can help EMC engineers gain knowledge quickly, much more quickly than through individual trial-and-error investigations.

Can you outline the main motivations for integrating AI with EMC simulation in your work at Graz University of Technology?

I have two motivations, which are at different stages of maturity. The first is multi-objective optimization. In product design, there is no single optimum to find. There are always trade-offs, for example, the quality achievable for a given budget: the more money I spend, the higher the quality and lifetime of my product, and with less money, I can’t design with the same quality. But what I always want is the best possible quality for the money I invest. In product design, there are many such trade-offs: cost, EMC limits, performance and efficiency, thermal design, etc. Usually, improving one of them spoils some of the others. To evaluate these trade-offs, many samples need to be investigated, and ML models offer an excellent way to produce them.
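To make the trade-off idea concrete, the sketch below shows one standard way to pick the “best possible” designs out of many evaluated samples: keep only the non-dominated (Pareto-optimal) ones. It assumes every objective is to be minimized (cost, emission level, losses, ...); the function name and the example values are hypothetical and not taken from the author’s work.

```python
import numpy as np


def pareto_front(objectives: np.ndarray) -> np.ndarray:
    """Return a boolean mask marking the non-dominated rows.

    Each row is one design sample, each column one objective to be
    minimized (e.g. cost, EMC emission level, losses).
    """
    n = objectives.shape[0]
    mask = np.ones(n, dtype=bool)
    for i in range(n):
        # Design j dominates design i if it is no worse in every objective
        # and strictly better in at least one.
        no_worse = np.all(objectives <= objectives[i], axis=1)
        better = np.any(objectives < objectives[i], axis=1)
        if np.any(no_worse & better):
            mask[i] = False
    return mask
```

For example, with two objectives (cost, emission) the samples `[[1, 5], [2, 3], [3, 4], [4, 1]]` give the mask `[True, True, False, True]`: the third design costs more and emits more than the second. The pairwise check is quadratic in the number of samples, so very large sample sets would call for a more efficient non-dominated sorting routine.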

The second topic is the complexity of EMC models. In particular, at high frequencies, that is, at frequencies where lumped-element models are no longer sufficiently accurate and I need to solve electromagnetic field problems, I need to collect many parameters to assemble my model: material parameters, dimensions, device models (for example, for semiconductors), information about the software driving the signals, etc. I could do this, but it takes time. What people usually do is guess most of the missing information. So, in 3D EM models, there is a lot of parameter uncertainty: I know the ranges of the parameters, but not their exact values. To turn an uncertain model into an accurate one, we need to calibrate it. Most often, some measurements are available, at least the response of the object under study to some excitations. I like to use ML methods to calibrate the uncertain parameters using these measurements. This is a task of statistical inference: we estimate the most likely set of parameters that reproduces the measured results. Since statistical inference needs many model evaluations, an ML model is the only practical way to find this parameter set.
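A minimal sketch of the calibration idea, assuming Gaussian measurement noise and uniform parameter ranges: draw many parameter sets within the known ranges, evaluate a cheap surrogate for each, and keep the set with the highest likelihood given the measurement. The resonance-curve `surrogate`, the parameters `f0` and `Q`, the ranges and `sigma` are all hypothetical; a real study would likely use a more efficient inference method (e.g. Markov chain Monte Carlo or gradient-based optimization), but brute force shows why many fast evaluations are needed.

```python
import numpy as np


def surrogate(theta, freqs):
    """Hypothetical fast surrogate: magnitude of a second-order resonance.

    theta[..., 0] is the resonance frequency f0, theta[..., 1] the quality factor Q.
    A batch of parameter sets with shape (n, 2) yields responses of shape (n, n_freqs).
    """
    f0 = theta[..., 0:1]
    q = theta[..., 1:2]
    x = freqs / f0
    return np.abs(1.0 / (1.0 - x**2 + 1j * x / q))


def calibrate(measured, freqs, lower, upper, sigma=0.05, n_samples=20_000, seed=0):
    """Maximum-likelihood parameters by brute-force sampling of the parameter box.

    Assumes independent Gaussian measurement noise with standard deviation
    sigma and parameters uniformly distributed in [lower, upper].
    """
    rng = np.random.default_rng(seed)
    thetas = rng.uniform(lower, upper, size=(n_samples, len(lower)))
    residuals = surrogate(thetas, freqs) - measured  # (n_samples, n_freqs)
    log_lik = -0.5 * np.sum(residuals**2, axis=1) / sigma**2
    return thetas[np.argmax(log_lik)]
```

With synthetic “measurements” generated from `f0 = 100` and `Q = 10` over `np.linspace(50, 150, 40)`, the estimate lands close to those values. With real data, the spread of the likelihood around its maximum also indicates how well each parameter is constrained by the measurement.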

What is a surrogate model and why is it useful for EMC analysis?

There are several definitions of what a surrogate model is. To me, a surrogate model is a behavioral model that mimics the physical response of a system under test. The model itself contains no physical information about the system, but it is built from physical models, from measurements, or from both. Also, the surrogates I am talking about are very fast to evaluate, which is what makes them so powerful. Basically, a surrogate is a non-parametric regression model over many parameters. There are many methods. You find neural networks very often because they are easy to implement, but there are many more, such as Gaussian processes, polynomial chaos expansion, etc.
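Gaussian processes are one of the methods named above. The following is a minimal Gaussian-process regression sketch in NumPy with a squared-exponential kernel and fixed, hand-picked hyperparameters (`length_scale`, `jitter`); a practical implementation would fit these to the data. The class and parameter names are illustrative only.

```python
import numpy as np


def sq_exp_kernel(a, b, length_scale):
    """Squared-exponential (RBF) kernel with unit prior variance.

    a: (n, d) array, b: (m, d) array -> (n, m) covariance matrix.
    """
    sq_dist = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq_dist / length_scale**2)


class GPSurrogate:
    """Minimal Gaussian-process regression surrogate with fixed hyperparameters."""

    def __init__(self, length_scale=0.2, jitter=1e-6):
        self.length_scale = length_scale
        self.jitter = jitter  # small diagonal term for numerical stability

    def fit(self, x, y):
        self.x = np.asarray(x, dtype=float)
        k = sq_exp_kernel(self.x, self.x, self.length_scale)
        self.chol = np.linalg.cholesky(k + self.jitter * np.eye(len(self.x)))
        # alpha = K^{-1} y, computed with two solves against the Cholesky factor
        self.alpha = np.linalg.solve(self.chol.T, np.linalg.solve(self.chol, y))
        return self

    def predict(self, x_new):
        """Return posterior mean and variance at the rows of x_new."""
        k_star = sq_exp_kernel(np.asarray(x_new, dtype=float), self.x, self.length_scale)
        mean = k_star @ self.alpha
        v = np.linalg.solve(self.chol, k_star.T)
        var = 1.0 - np.sum(v**2, axis=0)  # unit prior variance minus explained part
        return mean, np.maximum(var, 0.0)
```

Besides the mean, the GP returns a predictive variance that is near zero at training points and grows away from them, which is useful for deciding where more training data are needed. Each prediction involves all training points (`k_star` has one column per training sample), so evaluation gets slower as the training set grows.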

As said above, EMC is a lot about case studies and gaining experience. Also, EMC is a secondary product property: nobody buys a product because of its excellent electromagnetic compliance. So, EMC design is confronted with trade-offs: give me a product that is as cool as possible in many respects and that also passes the EMC requirements. Trade-offs require many model evaluations, and that’s why I need the surrogate.

What is a typical workflow for EMC simulation using AI? How do you connect the CST simulation results to the AI model?

First, you need an initial set of sample points in the parameter space to train your model. Once this is generated, you need the physical response of your system at these points, which you acquire from CST. The Python interface is very useful here; unfortunately, less documentation on this interface is available than there should be. Once you have mastered it, you can evaluate the physical model at all points of the training data set and construct the surrogate model. You can also do this in several steps: for example, you start with a small training data set, construct a model, and test the model against additional data. If the model is not yet accurate enough, you generate more training data, particularly in regions where the model performs poorly, and continue training. This is called adaptive learning, and it is a smart strategy for cases in which the deterministic model has long computation times. Strictly speaking, it is an exaggeration to speak of AI here. AI typically needs thousands or millions of training samples. We just work with regression and need on the order of hundreds of training samples, which, since we are using computationally heavy models, is the maximum we can afford.
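The adaptive-learning loop described above can be sketched as follows. `expensive_model` is a hypothetical placeholder: in a real workflow each row would launch a CST simulation, for instance through its Python interface, whose API is not shown here. The Gaussian RBF surrogate, the validation-set size, the tolerance and the refinement rule (new samples scattered around the worst validation points) are illustrative assumptions, not the author’s setup.

```python
import numpy as np


def expensive_model(x):
    """Hypothetical stand-in for one physical-model run per row of x.

    In a real workflow each row would trigger a full-wave simulation.
    Here: a cheap analytic function of 2 inputs.
    """
    return np.sin(3.0 * x[:, 0]) * np.cos(2.0 * x[:, 1]) + 0.5 * x[:, 0] ** 2


def fit_rbf(x, y, shape=3.0, jitter=1e-6):
    """Fit a Gaussian radial-basis-function interpolant; return a predictor."""
    def phi(a, b):
        r2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
        return np.exp(-(shape**2) * r2)

    weights = np.linalg.solve(phi(x, x) + jitter * np.eye(len(x)), y)
    return lambda x_new: phi(x_new, x) @ weights


def adaptive_training(n_init=10, n_val=50, n_add=5, tol=1e-2, max_rounds=20, seed=0):
    """Grow the training set where the surrogate is worst on a validation set."""
    rng = np.random.default_rng(seed)
    x_train = rng.uniform(0.0, 1.0, size=(n_init, 2))
    y_train = expensive_model(x_train)
    # The validation data also cost physical-model runs, so keep this set modest.
    x_val = rng.uniform(0.0, 1.0, size=(n_val, 2))
    y_val = expensive_model(x_val)

    model = fit_rbf(x_train, y_train)
    err = np.abs(model(x_val) - y_val)
    for _ in range(max_rounds):
        if err.max() < tol:
            break
        # New samples scattered around the n_add worst validation points.
        worst = x_val[np.argsort(err)[-n_add:]]
        x_new = np.clip(worst + rng.normal(scale=0.05, size=worst.shape), 0.0, 1.0)
        x_train = np.vstack([x_train, x_new])
        y_train = np.concatenate([y_train, expensive_model(x_new)])
        model = fit_rbf(x_train, y_train)
        err = np.abs(model(x_val) - y_val)
    return model, x_train, float(err.max())
```

Each call of `expensive_model` stands for full-wave runs, so in practice the budget is set by the number of simulations one can afford rather than by `max_rounds`.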

How do you ensure that these AI-generated surrogate models maintain a high level of accuracy compared to their full-wave counterparts?

This is quite simple. The full-wave model (or any other physical model) is always available. I can test my surrogate against the full-wave model, which I do anyway during training. Also, when I have computed an optimal parameter configuration in an optimization task using the surrogate, I always apply this parameter set to the original physical model to check whether the surrogate predicted well.
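The final check described above can be sketched as follows: search the cheap surrogate densely, then spend a single physical-model run at the predicted optimum and compare. Both model functions are hypothetical toy stand-ins (the surrogate deliberately misses a small ripple of the “physical” response), and the absolute tolerance `abs_tol` is an assumed acceptance criterion.

```python
import numpy as np


def physical_model(x):
    """Hypothetical stand-in for the (expensive) full-wave model."""
    return (x - 0.3) ** 2 + 0.05 * np.sin(20.0 * x)


def surrogate(x):
    """Hypothetical surrogate that captures the trend but misses the ripple."""
    return (x - 0.3) ** 2


def verify_optimum(surrogate_fn, physical_fn, candidates, abs_tol):
    """Optimize on the surrogate, then confirm with a single physical-model run."""
    predictions = surrogate_fn(candidates)  # cheap: evaluate every candidate
    x_best = candidates[np.argmin(predictions)]
    predicted = float(surrogate_fn(np.array([x_best]))[0])
    actual = float(physical_fn(np.array([x_best]))[0])  # expensive: one run
    return x_best, predicted, actual, abs(predicted - actual) <= abs_tol
```

On the grid `np.linspace(0, 1, 101)` the check finds the optimum at 0.3 and reports a mismatch of about 0.014 there, so it passes with `abs_tol = 0.02` but would fail with `abs_tol = 0.01`, which would be the signal to add training data near that point.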

What have been the most significant technological advancements in AI that have enabled its application in EMC simulation?

The most exciting feature of AI and ML models is that they break the curse of dimensionality. In high-dimensional spaces, data points tend to become sparse and spread out. The number of data points required to fully sample a given design space grows exponentially with the number of dimensions: to sample a 3D space with 3 samples in each dimension (and three samples is few: it is like min, max, and one value in between), I need $3^3 = 27$ samples. In 8 dimensions, it is $3^8 = 6561$, and with 15 dimensions, it is $3^{15}$, more than 14 million samples.
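The full-grid numbers quoted above follow from raising the number of levels per dimension to the number of dimensions. A short check (the helper name is illustrative):

```python
def full_grid_size(levels_per_dim: int, n_dims: int) -> int:
    """Number of points in a full-factorial grid with equal levels per dimension."""
    return levels_per_dim ** n_dims


for d in (3, 8, 15, 18):
    print(f"{d:2d} dimensions: {full_grid_size(3, d):,} samples")
```

This prints 27, 6,561, 14,348,907 and 387,420,489 samples for 3, 8, 15 and 18 dimensions, respectively.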

ML has solved this problem. Sophisticated sampling strategies exist that work for design spaces of 15 or even 18 dimensions. And in all the cases we have investigated so far, we have never needed more than, say, 1000 training samples. This is amazing. And this has worked for many different EMC problems, be it models of EMI filters, of power converters, or of the radiated emission of shielded wires.
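The interview does not say which sampling strategies are used. As one widely used example, Latin hypercube sampling spreads a fixed budget of points so that every one-dimensional projection is evenly covered, regardless of the number of dimensions. A minimal NumPy sketch (function names and bounds are hypothetical):

```python
import numpy as np


def latin_hypercube(n_samples, n_dims, seed=None):
    """Latin hypercube sample in the unit cube [0, 1)^n_dims.

    Each dimension is split into n_samples equal strata and every stratum
    is hit exactly once, so all 1-D projections are evenly covered.
    """
    rng = np.random.default_rng(seed)
    # One random point inside each stratum, for every dimension.
    u = (np.arange(n_samples)[:, None] + rng.random((n_samples, n_dims))) / n_samples
    # Shuffle the strata independently per dimension to decorrelate the columns.
    for j in range(n_dims):
        u[:, j] = rng.permutation(u[:, j])
    return u


def scale(unit_samples, lower, upper):
    """Map unit-cube samples to the physical parameter ranges."""
    lower, upper = np.asarray(lower, dtype=float), np.asarray(upper, dtype=float)
    return lower + unit_samples * (upper - lower)
```

For example, `scale(latin_hypercube(1000, 18, seed=0), lower, upper)` would give 1000 points for an 18-dimensional design space with per-parameter bounds `lower` and `upper`.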

In what scenarios is using AI in EMC simulation most beneficial?

We see the immediate benefit of AI in the multi-objective optimization of electronic systems in early design. In early design, many parameters are still unknown. Using multi-objective optimization, we quickly obtain benchmarks of the best possible designs, for a variety of ‘optimal’ solutions, whatever kind of optimality we look at. And if a hardware sample is already available, we can check how far this realization is from the optimal benchmark. Of course, what the AI does not solve is how an optimal parameter set is converted into a real 3D design. But since many optimal designs can be generated, it is possible to quickly nail down a feasible one that is proven to be close to optimal.
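One simple way to quantify “how far this realization is from the optimal benchmark” is to ask by what fraction every objective of the hardware design could be improved at once by moving to some point on the computed Pareto front. The sketch below assumes minimized, nonzero objectives; the metric and its name are illustrative choices, not the author’s.

```python
import numpy as np


def benchmark_gap(design, front):
    """Largest fraction by which all objectives of `design` could be improved
    at once by moving to some point of the Pareto `front`.

    Objectives are minimized and assumed nonzero for `design`. A gap of 0
    means no front point improves every objective simultaneously.
    """
    design = np.asarray(design, dtype=float)
    front = np.asarray(front, dtype=float)
    rel_gain = (design - front) / np.abs(design)  # (n_front, n_objectives)
    # Each front point is limited by the objective it improves least.
    worst_gain = rel_gain.min(axis=1)
    return max(float(worst_gain.max()), 0.0)
```

For the front `[[1, 4], [2, 2], [4, 1]]`, a hardware design at `[4, 4]` gets a gap of 0.5 (the front point `[2, 2]` halves both objectives), while a design already on the front gets 0.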

Compared to full-wave 3D, how much faster is the surrogate model? When does it become more efficient than 3D (including initial computation and training time)?

The time gain is huge. In general, four factors matter. First, the time to evaluate the physical model (if this is too short, as in a quick AC circuit simulation, ML is not needed). Second, the number of training samples I need. Third, the time to construct the surrogate, which is usually negligible compared to the first two. And fourth, the time to evaluate the surrogate, which is very short: we have models that run within 300 microseconds, and some take several milliseconds. The more training data is needed, the slower the evaluation of the ML model. On average, we can run some 10 million samples a day, which is completely impossible with deterministic models. Essentially, I regard an ML model as a container of solutions rather than a model.
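The four factors can be combined into a simple break-even estimate: the surrogate route costs the training runs plus construction time plus many cheap evaluations, while the direct route costs one physical run per evaluation. The sketch below assumes fixed per-run times; the numbers in the usage example are hypothetical, not figures from the interview.

```python
import math


def break_even_evaluations(t_phys, n_train, t_build, t_surrogate):
    """Smallest number of evaluations for which the surrogate route is faster.

    Surrogate route: n_train * t_phys + t_build + n * t_surrogate
    Direct route:    n * t_phys
    All times in seconds.
    """
    if t_surrogate >= t_phys:
        return math.inf  # a surrogate slower than the physical model never pays off
    overhead = n_train * t_phys + t_build
    # Surrogate is strictly faster when n * (t_phys - t_surrogate) > overhead.
    return math.floor(overhead / (t_phys - t_surrogate)) + 1


def samples_per_day(t_surrogate):
    """Surrogate evaluations that fit into 24 hours of sequential computation."""
    return 86_400 / t_surrogate
```

For instance, with a 10-minute full-wave run (`t_phys = 600`), 500 training runs, one hour of construction and 5 ms per surrogate evaluation, `break_even_evaluations(600.0, 500, 3600.0, 0.005)` returns 507, so the surrogate pays off after roughly as many evaluations as were spent on training. Conversely, `samples_per_day(0.00864)` is 10 million, i.e. 10 million samples a day corresponds to about 8.6 ms per evaluation.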

Looking forward, what are the next steps or future developments for your work?

First, I’d like to see the optimal designs that we proposed realized in hardware. If we can prove that ML lets us quickly find designs that outperform existing ones, then we have excellent proof of the benefit of ML. We are working on electric drives, and our industrial partners will definitely build real hardware samples based on our estimations. I am very much looking forward to seeing these samples and assessing their performance.

Secondly, I am very much interested in model calibration. We have worked on initial, simple samples with very promising results. Next, we would like to apply this technique to complex hardware samples. I am really excited to see whether we can produce high-frequency EMC models of these with much better accuracy than we see today.

Lastly, I am also very interested in training AI models with physical constraints. Right now, the training process is purely statistical; our AI models are not very sophisticated and do not know any physical relations. This is rather unwise, considering that we have been solving Maxwell’s equations for more than 150 years. And now we throw this knowledge overboard and everything is just stochastic. We recently constructed an ML model that understands Kirchhoff’s Current Law, a fundamental law of electrical circuits. It is a stochastic method, but we could teach it that, whatever it predicts, the currents at every node always sum to zero. This is a big step forward in equipping ML models with some of the deterministic knowledge that every human engineer obviously has. I hope that by including physical laws in the training, we can reduce the amount of training data significantly. This is important for models with long training times. This research is a lot of fun and very interesting, so even if we still have a long way to go, it is much more than a great academic exercise.
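The interview does not describe how the KCL constraint was built into the model. As a hedged illustration of the general idea, the sketch below enforces KCL after the fact: it projects a purely statistical current prediction onto the set of branch currents that satisfy KCL at every node, using the circuit’s node-branch incidence matrix. This post-hoc projection is a swapped-in technique chosen for brevity, not the authors’ method.

```python
import numpy as np


def project_onto_kcl(currents, incidence):
    """Project predicted branch currents onto the subspace that satisfies KCL.

    incidence[n, b] = +1 if branch b leaves node n, -1 if it enters, else 0.
    KCL demands incidence @ currents == 0 at every node. Removing the
    component of the prediction that lies in the row space of the incidence
    matrix is the smallest least-squares change that enforces this.
    """
    currents = np.asarray(currents, dtype=float)
    incidence = np.asarray(incidence, dtype=float)
    # pinv(A) @ A is the orthogonal projector onto the row space of A,
    # which also handles the rank deficiency of a full incidence matrix.
    correction = np.linalg.pinv(incidence) @ (incidence @ currents)
    return currents - correction
```

For a single loop of three branches (incidence rows `[1, 0, -1]`, `[-1, 1, 0]`, `[0, -1, 1]`), a noisy prediction `[1.0, 1.1, 0.9]` is corrected to `[1.0, 1.0, 1.0]`, the closest current vector in the least-squares sense for which no charge accumulates at any node.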
