How to Build an AI-Ready Lab

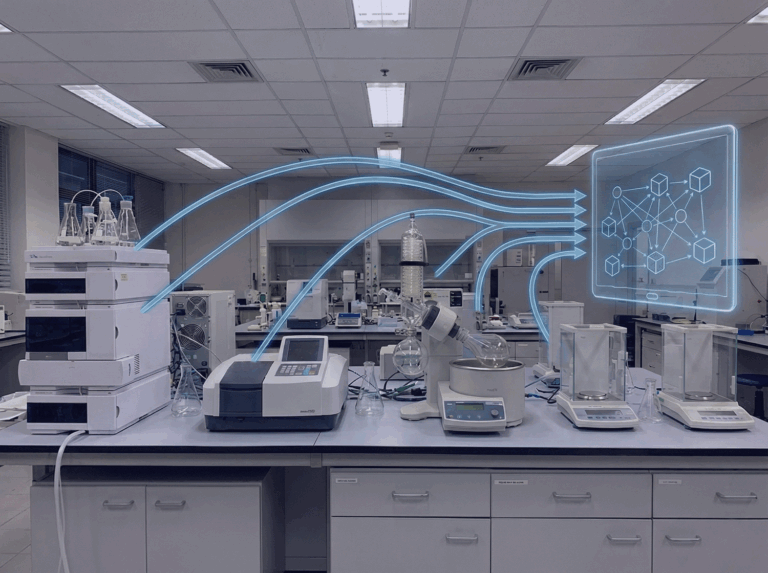

Every organization claims to be leveraging AI to transform R&D, yet few labs are truly prepared. The challenge isn’t a lack of instruments or software; it’s data that is fragmented, inconsistent, and disconnected from the scientific context that machine learning, prediction, and generative design depend on. Building an AI-Ready lab is not about installing new algorithms. It’s about building a data foundation that unifies experiments, connects systems, captures metadata, preserves lineage, and creates a continuous flow between real-world science and digital intelligence. This blog explores how to build that foundation from the ground up: establishing the architecture, creating the data lake, developing the knowledge layer, implementing governance, and integrating physical and virtual experiments so that AI can ultimately accelerate scientific breakthroughs.

Why AI Matters in Modern R&D

For science-driven industries (pharma, biotech, chemicals, materials, and CPG), AI is reshaping how discovery happens. Traditional R&D has been built on slow, sequential, trial-and-error experimentation. AI shifts this toward a fast, predictive, data-driven model. It shortens the path from idea to optimized candidate, reduces dependence on costly physical experiments, and automates much of the data processing so scientists can spend their time thinking rather than searching, formatting, or cleaning.

Modern labs generate massive volumes of complex data, and AI is one of the few practical ways to extract meaningful patterns at that scale. Given data connected across ELNs, LIMS, instruments, modeling systems, and the literature, AI can uncover relationships that humans would likely overlook. When results are linked to the specific runs, instruments, conditions, and users that produced them, its insights become more traceable, reliable, and actionable. AI accelerates innovation not by replacing scientists, but by amplifying their ability to explore more ideas, make better decisions, and reach breakthroughs faster.

Walk into almost any modern lab today and you’ll see world-class scientists surrounded by cutting-edge instruments. LC/MS systems hum in the background, automation platforms move with precision, and digital notebooks have replaced stacks of handwritten pages.

And yet—despite all the technology—most labs are nowhere near ready for AI.

Why?

Because the single most important ingredient for AI isn’t the algorithm.

It’s the data.

Not just having data.

Not just digitizing data.

But building a data foundation that is complete, connected, contextualized, compliant, and ready to fuel machine learning, predictive models, and generative systems.

1. The Myth: AI Starts with Models.

The Reality: AI Starts with the Lab.

Executives often say they want “AI to improve R&D productivity.”

But AI cannot accelerate decision-making if the data behind those decisions is incomplete or trapped in silos.

Today, scientific data is scattered across:

- ELNs that store experimental descriptions

- LIMS that track samples and QC data

- Instruments producing spectroscopy files, images, and chromatograms

- Spreadsheets living on shared or local drives

- PDFs, reports, and PowerPoints documenting meetings

- Databases holding structured records

- Modeling systems generating simulations

Each of these systems is valuable.

None of them—on their own—makes a lab ready for AI.

AI thrives on connection, and most labs are still built on islands.

Building an AI-Ready lab means thinking of your lab not as a set of tools, but as a data ecosystem.

2. The Foundation: A Unified Data Ecosystem

Getting AI-Ready starts with deciding where your data lives, how it flows, and how it connects. Successful AI transformations share a common pattern: they build a data architecture with three interconnected layers—operational databases, a scientific data lake, and a knowledge layer that provides meaning and context.

Databases: The Operational Nervous System

Databases sit under core systems like traditional ELN, LIMS, inventory management, or sample tracking. They store structured, regulated records with strict schemas and high reliability. These databases keep the lab running. They support compliance, traceability, and controlled vocabularies.

But most of these systems store only structured data, and only the subset that fits neatly into tables.

For AI, this is necessary but nowhere near sufficient.

The Data Lake: The Scientific Memory of the Lab

If a database is the lab’s nervous system, the data lake is its long-term memory.

A modern laboratory produces enormous amounts of unstructured scientific content:

- Raw instrument data

- High-resolution assay images

- NMR spectra and chromatograms

- ELN attachments

- Simulation outputs

- PDFs and presentations

- Sensor logs

- Robotic workflow files

None of these fits well in a traditional database. All of it is essential for powerful AI.

A scientific data lake accepts data in any format (structured, semi-structured, or unstructured) and stores it as-is. When AI or analytics tools need the data, structure is applied on demand, an approach often called schema-on-read. This flexibility is what makes the data lake the heart of an AI-Ready lab.

The key is to ensure all data—experimental, analytical, simulation, formulation, recipe, process—flows into this environment with full metadata captured.
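To make the schema-on-read idea concrete, here is a minimal in-memory sketch in Python. It assumes a toy store: the `ScientificLake` class, its `ingest` and `read_csv` methods, and the instrument and experiment identifiers are all hypothetical. The store keeps each payload byte-for-byte, keys it by a SHA-256 content hash, records metadata alongside it, and only parses it into rows when a consumer asks. A production lake would sit on object storage rather than a Python dict.

```python
import csv
import hashlib
import io
from dataclasses import dataclass, field


@dataclass
class RawObject:
    """A file stored exactly as the instrument or system produced it."""
    payload: bytes
    metadata: dict


@dataclass
class ScientificLake:
    """Hypothetical toy lake: keys are content hashes, values are untouched payloads."""
    objects: dict = field(default_factory=dict)

    def ingest(self, payload: bytes, **metadata) -> str:
        # Store the bytes as-is; no schema is applied at write time.
        key = hashlib.sha256(payload).hexdigest()
        self.objects[key] = RawObject(payload, dict(metadata))
        return key

    def read_csv(self, key: str) -> list[dict]:
        # Structure is applied only when a consumer asks for it (schema-on-read).
        text = self.objects[key].payload.decode("utf-8")
        return list(csv.DictReader(io.StringIO(text)))


lake = ScientificLake()
key = lake.ingest(
    b"time_min,absorbance\n0.5,0.12\n1.0,0.48\n",
    instrument="HPLC-02", experiment_id="EXP-1042", operator="jdoe",
)
rows = lake.read_csv(key)
print(rows[1]["absorbance"])                     # 0.48
print(lake.objects[key].metadata["instrument"])  # HPLC-02
```

The same raw bytes could later be read by a different parser (an image loader, a spectrum reader) without re-ingesting anything, which is the practical benefit of deferring structure.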

The Knowledge Layer: Turning Data into Insights

Data alone cannot power AI. AI needs context.

A knowledge layer provides that context by enforcing consistent vocabularies, capturing rich metadata, and preserving data lineage so that every experiment, batch, formulation, analytical result, and scientific conclusion is connected. This is what turns isolated files into connected science. When relationships between data points are explicit, AI systems can interpret how inputs drive outcomes, learn more efficiently, and generate better predictions with fewer experiments.

A common way to build this semantic foundation is RDF, the Resource Description Framework, a W3C standard that expresses information as subject-predicate-object statements (triples) that link together into a graph. In this model, the knowledge layer is not just a place where data is stored, but a system that explicitly encodes how the pieces fit together. This is the moment when AI shifts from processing data to accelerating discovery.
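As a rough illustration of how triples make lineage explicit, the sketch below stores facts as plain Python `(subject, predicate, object)` tuples and walks `ex:derivedFrom` links back from a result to its formulation. The `ex:` vocabulary and all identifiers are hypothetical, and this is not an RDF library; in practice you would typically use an RDF store queried with SPARQL (in Python, for example, the rdflib package).

```python
# Each fact is a (subject, predicate, object) triple, the same shape RDF uses.
# The "ex:" vocabulary below is hypothetical and only for illustration.
TRIPLES = {
    ("ex:Result-77", "ex:derivedFrom", "ex:Run-12"),
    ("ex:Run-12", "ex:measuredOn", "ex:Instrument-HPLC-02"),
    ("ex:Run-12", "ex:derivedFrom", "ex:Batch-5"),
    ("ex:Batch-5", "ex:derivedFrom", "ex:Formulation-F3"),
    ("ex:Formulation-F3", "ex:hasIngredient", "ex:Surfactant-A"),
}


def objects(subject, predicate, triples=TRIPLES):
    """Return every object linked to `subject` by `predicate`, sorted."""
    return sorted(o for s, p, o in triples if s == subject and p == predicate)


def lineage(node, triples=TRIPLES):
    """Follow ex:derivedFrom links from a node back to its origin."""
    chain = [node]
    parents = objects(node, "ex:derivedFrom", triples)
    # Follow the first parent each time; stop at the origin or on a cycle.
    while parents and parents[0] not in chain:
        node = parents[0]
        chain.append(node)
        parents = objects(node, "ex:derivedFrom", triples)
    return chain


print(lineage("ex:Result-77"))
# ['ex:Result-77', 'ex:Run-12', 'ex:Batch-5', 'ex:Formulation-F3']
print(objects("ex:Run-12", "ex:measuredOn"))
# ['ex:Instrument-HPLC-02']
```

Because every link is an explicit statement, the question "which formulation produced this result, and on which instrument was it measured?" becomes a graph traversal rather than a manual search across systems.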

Learn how BIOVIA ONE Lab connects all of this on one platform.

3. Creating Flow: Connecting Instruments, Systems, and the Data Platform

An AI-Ready lab cannot tolerate manual uploads, inconsistent file naming, or critical assay results stored in somebody’s personal folder in a file called “Final_v3_EDITED_2.xlsx.”

Data must move automatically from:

Instruments → Lab Systems → Data Lake → Knowledge Layer → AI Models

This requires:

- Instrument connectivity

- API-driven system integrations

- Workflow orchestration

- Automated metadata capture

- Templates that enforce scientific consistency

When every experiment is automatically captured, tagged, stored, and contextualized, the lab becomes a continuous source of machine-readable knowledge.

That is the moment AI becomes not just possible—but powerful.
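The sketch below shows what automated metadata capture and template enforcement might look like at the point of ingestion, assuming a hypothetical experiment template with four required fields. The `capture` function and the field names are illustrative only: it rejects exports that are missing template fields, then derives a canonical file name from the metadata, so that a file like “Final_v3_EDITED_2.xlsx” gets a predictable, searchable name while its original name is kept for traceability.

```python
from datetime import datetime, timezone

# Hypothetical experiment template: fields every captured record must carry.
TEMPLATE_FIELDS = ("project", "experiment_id", "instrument", "method")


def capture(raw_name: str, fields: dict, acquired_at: datetime) -> dict:
    """Turn an instrument export into a consistently named, tagged record."""
    missing = [f for f in TEMPLATE_FIELDS if not fields.get(f)]
    if missing:
        # Reject at the source instead of letting gaps reach the lake.
        raise ValueError(f"missing template fields: {missing}")
    # Normalize the acquisition time to UTC so names sort and compare cleanly.
    stamp = acquired_at.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    extension = raw_name.rsplit(".", 1)[-1].lower()
    canonical = (
        f"{fields['project']}_{fields['experiment_id']}_"
        f"{fields['instrument']}_{stamp}.{extension}"
    )
    return {
        "canonical_name": canonical,
        "original_name": raw_name,
        "acquired_at_utc": stamp,
        **{f: fields[f] for f in TEMPLATE_FIELDS},
    }


record = capture(
    "Final_v3_EDITED_2.xlsx",
    {"project": "P7", "experiment_id": "EXP-1042", "instrument": "HPLC-02", "method": "M-15"},
    datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
)
print(record["canonical_name"])  # P7_EXP-1042_HPLC-02_20240115T093000Z.xlsx
```

In a real deployment this step would run inside the instrument connector or integration layer, so that no scientist has to remember the naming convention.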

4. Preparing Data for AI: Cleaning, Curation, and Connection

Before data reaches AI models, it should be prepared automatically. Key tasks include:

- Standardizing units and formats

- Aligning naming conventions

- Removing redundancy

- Linking data across systems

- Annotating with metadata

- Capturing lineage and uncertainty

- Scoring data quality

- Creating curated training sets

These steps turn raw science into computable science, ready for machine learning, predictive modeling, and generative design. This is where the lab becomes a true partner to AI.
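Several of these preparation steps can be sketched in a few lines. This minimal example assumes hypothetical lookup tables for units and compound synonyms, plus a deliberately simple quality score (the fraction of required fields that are filled in). It normalizes concentrations to mg/mL, aligns compound names, drops records that fail the checks, and removes duplicates that only become visible after normalization.

```python
# Hypothetical lookup tables; in practice these would be governed vocabularies.
TO_MG_PER_ML = {"mg/mL": 1.0, "g/L": 1.0, "ug/mL": 0.001, "mg/L": 0.001}
COMPOUND_ALIASES = {"nacl": "sodium chloride", "sodium chloride": "sodium chloride"}
REQUIRED_FIELDS = ("sample_id", "compound", "value", "unit")


def quality_score(record: dict) -> float:
    """Simple completeness score: fraction of required fields that are filled."""
    filled = sum(1 for f in REQUIRED_FIELDS if record.get(f) not in (None, ""))
    return filled / len(REQUIRED_FIELDS)


def prepare(records: list[dict], min_quality: float = 1.0) -> list[dict]:
    """Standardize units, align names, filter on quality, and deduplicate."""
    seen, curated = set(), []
    for rec in records:
        score = quality_score(rec)
        factor = TO_MG_PER_ML.get(rec.get("unit"))
        name = COMPOUND_ALIASES.get(str(rec.get("compound", "")).strip().lower())
        if score < min_quality or factor is None or name is None:
            continue  # left out of the curated set; the input record is not modified
        conc = round(float(rec["value"]) * factor, 6)
        key = (rec["sample_id"], name, conc)
        if key in seen:
            continue  # same measurement expressed differently in another system
        seen.add(key)
        curated.append({"sample_id": rec["sample_id"], "compound": name,
                        "conc_mg_per_ml": conc, "quality": score})
    return curated


raw_records = [
    {"sample_id": "S1", "compound": "NaCl", "value": 2.0, "unit": "g/L"},
    {"sample_id": "S1", "compound": "Sodium Chloride ", "value": 2000, "unit": "mg/L"},
    {"sample_id": "S2", "compound": "NaCl", "value": 500, "unit": "ug/mL"},
    {"sample_id": "S3", "compound": "NaCl", "value": "", "unit": "mg/mL"},
]
curated = prepare(raw_records)
for row in curated:
    print(row)  # two rows: S1 at 2.0 mg/mL and S2 at 0.5 mg/mL
```

Note that the first two records only reveal themselves as duplicates after units and names are standardized, which is why normalization comes before deduplication.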

5. Data Governance: The Quiet Hero of AI Success

Every company wants AI.

Very few want the discipline required to make AI successful.

Data governance is not glamorous.

But it is the difference between:

- An AI system that reinforces noise

- And an AI system that accelerates discovery

Governance defines:

- How experiments are documented

- What metadata must be captured

- How results are named and structured

- Who owns and stewards each data set

- How versions and audit trails are handled

- How quality is measured and monitored

- How compliance is enforced

Without governance, a data lake becomes a data swamp.

With governance, it becomes a scientific engine.
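Governance rules become enforceable once they are written down as code. The sketch below assumes a hypothetical `GovernedDataset` class that names a steward, declares which metadata every entry must carry, never overwrites data (each commit becomes a new version), and records who changed what and why. It illustrates the kinds of rules listed above; it is not a model of any particular product or regulation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class GovernedDataset:
    """Hypothetical append-only dataset with a steward and a metadata policy."""
    name: str
    steward: str
    required_metadata: tuple
    versions: list = field(default_factory=list)
    audit_trail: list = field(default_factory=list)

    def commit(self, data: dict, metadata: dict, user: str, reason: str) -> int:
        # Enforce the metadata policy before anything is written.
        missing = [k for k in self.required_metadata if k not in metadata]
        if missing:
            raise ValueError(f"{self.name}: missing required metadata {missing}")
        # Never overwrite: every change becomes a new version.
        self.versions.append({"data": dict(data), "metadata": dict(metadata)})
        version = len(self.versions)
        # Record who changed what, when, and why.
        self.audit_trail.append({
            "version": version,
            "user": user,
            "reason": reason,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return version


assays = GovernedDataset("potency-assays", steward="qc-team",
                         required_metadata=("method", "instrument", "units"))
meta = {"method": "M-15", "instrument": "HPLC-02", "units": "%"}
v1 = assays.commit({"S1": 98.2}, meta, user="jdoe", reason="initial load")
v2 = assays.commit({"S1": 98.4}, meta, user="asmith", reason="reintegrated peak")
print(v1, v2, assays.versions[0]["data"]["S1"])  # 1 2 98.2
```

The earlier value stays retrievable after the correction, and the audit trail explains why it changed, which is exactly what keeps a lake from turning into a swamp.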

6. Integrate Real and Virtual Experiments

AI-Ready labs unify physical and virtual experimentation into a single, continuous scientific process. What happens on the bench is instantly connected to what happens in silico — from molecular simulation and materials modeling to predictive formulation, virtual twins, and generative AI that proposes new hypotheses. This fusion is now essential across chemicals, materials, life sciences, and consumer products, enabling teams to explore more possibilities, make faster decisions, and arrive at breakthrough innovations with greater confidence.

The AI-Ready lab becomes a feedback loop:

- AI designs or predicts candidates

- Lab executes and generates real-world results

- Results flow back into the AI models

- Models improve and workflows accelerate

This loop only works when data flows seamlessly across systems.
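The feedback loop can be simulated in a few lines. In this toy sketch, `run_experiment` is a hypothetical stand-in for the physical lab (a yield curve that peaks at 70 °C), and `propose` is a deliberately crude stand-in for an AI model: it scores each untested candidate by the yield of its nearest tested neighbor plus a bonus for distance from it, balancing exploitation and exploration. Real systems would use a proper surrogate model, for example Bayesian optimization with a Gaussian process, but the loop structure is the same: propose, execute, feed results back, repeat.

```python
def run_experiment(temp_c: float) -> float:
    """Hypothetical stand-in for the physical lab: yield (%) peaks at 70 °C."""
    return 100.0 - 0.02 * (temp_c - 70.0) ** 2


def propose(history, candidates, explore=0.5):
    """Crude stand-in for an AI model: pick the untested candidate whose
    nearest tested neighbor scored well, with a bonus for distance from it."""
    tested = {x for x, _ in history}
    best, best_score = None, float("-inf")
    for c in candidates:
        if c in tested:
            continue
        near_x, near_y = min(history, key=lambda h: abs(h[0] - c))
        score = near_y + explore * abs(near_x - c)
        if score > best_score:
            best, best_score = c, score
    return best


candidates = list(range(20, 121, 10))  # 20, 30, ..., 120 °C
history = [(20, run_experiment(20)), (120, run_experiment(120))]  # seed runs

for _ in range(5):
    x = propose(history, candidates)  # the model proposes a candidate
    y = run_experiment(x)             # the lab executes it
    history.append((x, y))            # the result flows back into the model

best_x, best_y = max(history, key=lambda h: h[1])
print(best_x, best_y)  # 70 100.0
```

With these values the first proposal already lands on 70 °C, and later rounds probe its neighbors; in total 7 of the 11 candidate temperatures are tested.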

7. Build the AI Layer: Models, Analytics, and Scientific Learning Loops

After the data foundation is built, AI can start delivering real value. Use cases differ by industry, but common ones include:

Chemicals & Materials

- Predictive materials design

- Simulation-augmented lab testing

- Property prediction

- Generative design of polymers, catalysts, coatings

CPG & Formulation

- Predictive formulation optimization

- Sensory and texture modeling

- Ingredient substitution

- Sustainability-driven formulation redesign

Pharma & Biotech

- Assay optimization

- Biologics design

- Analytical method development

- Reaction prediction

AI becomes a natural extension of scientific workflows—not an afterthought.

8. Toward the AI-Driven Lab: Closing the Loop

When the foundation is in place, the lab evolves rapidly:

Experiments feed models.

Models propose new experiments.

Robotics executes them.

Data flows back automatically.

The model improves.

The cycle continues.

This self-improving loop—where the Virtual + Real worlds reinforce each other—is the future of scientific R&D.

It is the destination of an AI-Ready lab.

And it is only possible because the data foundation is strong.

The Takeaway: AI in the Lab Begins with Data

AI is not something you add at the end of digital transformation.

It is something you start building from the beginning.

An AI-Ready lab is built on:

- A modern data architecture

- Seamless data flow

- Strong governance

- High-quality digital systems

- A unified scientific data model

- A culture of data discipline

When you get the data right, AI becomes a natural extension of the lab: an intelligent layer woven into every experiment, every decision, and every discovery. This is how leading companies transform their labs, make AI real and reliable, and build the future of R&D.
